Stanford CS Education Library: a 16 page introduction to the use of common Unix programming tools. Introduces the overall edit-compile-link-debug programming cycle and the explains the basic usage of...gcc, make, gdb, emacs, and the shell. The goal is to describe the major features and typical uses of the tools and show how they fit together with enough detail for basic projects. We use a version of this handout at Stanford to help our students get started building programs on Unix.

The blog provides study material for Computer Science(CS) aspirants. Mostly says "material nahi milta, padhun kahan se.", I think If you can not find content on the Internet, then you are not a CS student. Dedicated to (Prof. Rakesh Kumar, DCSA, K.U.Kurukshetra, HARYANA, INDIA)- "Ek teacher ka bahut jyada padhna, bahut jyada jaroori hota hai."

Friday 28 February 2014

A note on Binary Trees

Introduction to the basic concepts of binary trees, and works through a series of practice problems with solution code in C/C++ and Java. Binary trees have an elegant recursive pointer structure, so they are a good way to learn recursive pointer algorithms.

See: BinaryTrees.html

Or as a PDF: BinaryTrees.pdf-- same content, just in PDF format

See: TreeListRecursion.html

Or as a PDF: TreeListRecursion.pdf -- the same content, but in PDF, so both the text and images are in the one file

Big Thanks to Stanford university for providing this kind of material.

See: BinaryTrees.html

Or as a PDF: BinaryTrees.pdf-- same content, just in PDF format

The Great Tree-List Recursion Problem

Stanford CS Education Library: one of the neatest recursive pointer problems ever devised. This is an advanced recursive pointer problem that uses a binary tree and a doubly linked list. You should be comfortable with pointers and recursion to understand this problem. This article introduces the problem with a few memory diagrams, gives some hints, and provides solution code in both Java and C/C++. Available in both HTML and PDF...See: TreeListRecursion.html

Or as a PDF: TreeListRecursion.pdf -- the same content, but in PDF, so both the text and images are in the one file

Big Thanks to Stanford university for providing this kind of material.

Understanding Linked List

Stanford CS Education Library: a 26 page introduction to linked lists in C/C++. Includes examples, drawings, and practice problems, and solution code. The more advanced article, Linked List Problems, has 18 sample problems with solutions.

This article introduces the basic structures and techniques for building linked lists with a mixture of explanations, drawings, sample code, and exercises. The material is useful if you want to understand linked lists or if you want to see a realistic, applied example of pointer-intensive code. Even if you never really need a linked list, they are an excellent way to learn pointers and pointer algorithms.

This article introduces the basic structures and techniques for building linked lists with a mixture of explanations, drawings, sample code, and exercises. The material is useful if you want to understand linked lists or if you want to see a realistic, applied example of pointer-intensive code. Even if you never really need a linked list, they are an excellent way to learn pointers and pointer algorithms.

Download LinkedListBasics.pdf

A quick review of linked list basics followed by 18 linked list problems, basic through advanced, with solution code in C/C++. Nobody really uses linked lists any more, so why bother with these problems? Linked lists are a superb source of complex practice problems. Link list problems are simple to define, yet can have complicated, pointer-intensive solutions (which is why they are often used on exams and in interviews). If you are serious about your pointer/algorithm skills, there's no substitute for practice and this is the place to start.For a few problems, multiple solutions are presented, such as iteration vs. recursion or dummy node vs. reference pointer. The problems are: Count, GetNth, DeleteList, Pop, InsertNth, SortedInsert, InsertSort, Append, FrontBackSplit, RemoveDuplicates, MoveNode, AlternatingSplit, ShuffleMerge, SortedMerge, SortedIntersect, Reverse, and RecursiveReverse.

Download LinkedListProblems.pdf

Binky Pointer Fun Video

A funny, good, basic and useful pointer video, please watch once:

Click here

Stanford CS Education Library: a 31 page introduction to programming with pointers and memory in C, C++ and other languages. Explains how pointers and memory work and how to use them -- from the basic concepts through all the major programming techniques. Can be used as an introduction to pointers for someone with basic programming experience or as a quick review. Many advanced programming and debugging problems only make sense with a solid understanding of pointers and memory -- this document tries to provide that understanding.

Topics include: pointers, local memory, allocation, deallocation, dereference operations, pointer assignment, deep vs. shallow copies, the ampersand operator (&), bad pointers, the NULL pointer, value parameters, reference parameters, pointer to pointers, dynamic heap memory, memory ownership models, and memory leaks. The article focuses on pointers and memory in compiled languages like C and C++ with occasional notes about Java.

Click here

Stanford CS Education Library: a 31 page introduction to programming with pointers and memory in C, C++ and other languages. Explains how pointers and memory work and how to use them -- from the basic concepts through all the major programming techniques. Can be used as an introduction to pointers for someone with basic programming experience or as a quick review. Many advanced programming and debugging problems only make sense with a solid understanding of pointers and memory -- this document tries to provide that understanding.

Topics include: pointers, local memory, allocation, deallocation, dereference operations, pointer assignment, deep vs. shallow copies, the ampersand operator (&), bad pointers, the NULL pointer, value parameters, reference parameters, pointer to pointers, dynamic heap memory, memory ownership models, and memory leaks. The article focuses on pointers and memory in compiled languages like C and C++ with occasional notes about Java.

Download PointersAndMemory.pdf

Wednesday 19 February 2014

Software-Implemented Fault Tolerance (SIFT)

In the

internet you can google acronyms like SWIFT (Software Implemented Fault

Tolerance) or SIHFT (Software-implemented Hardware Fault Tolerance). Both have

the same meaning and describe a group of methods that help to organize,

enforce, protect, optimize your software in a such way that it will be tolerant

of bit flips. In this post I would like to discuss general SIHFT

approaches and give useful links to the publications for further details.

Control

flow checking

Detection of hardware

errors using control flow checking provides the means for recognizing of

invalid control flow of the executed program. Execution of sequences of

instructions that are not permitted for the executed binary can be detected.

Control flow checking cannot detect errors, which do only influence processed

data. Usually, control flow checking is only applied for inter-basic-block

control flow. Erroneous control flow within the boundaries of basic-blocks is

not detectable. Here is a simple example. We decompose the source code (on

the instruction level) into the basic blocks. A basic block is a number of

instructions in-between two control flow jumps (branching instructions). After

that we generate unique exit-signatures for all blocks. We instrument our code

in a such way that at the end of each block we save this signature into a special

register, and than, check it at the beginning of the next block. This helps to

tolerate bit-flips in control flow instructions and prevent wrong control flow

jumps.

·

Edson Borin, Cheng Wang,

Youfeng Wu, and Guido Araujo. Software-based transparent and comprehensive

control-flow error detection. In Proceedings of the International Symposium on

Code Generation and Optimization (CGO), Washington, DC, USA, 2006. IEEE

Computer Society. 6, 7

·

Ramtilak Vemu and Jacob A.

Abraham. CEDA: Control-flow error detection through assertions. In IOLTS ’06:

Proceedings of the 12th IEEE International Symposium on On-Line Testing. IEEE

Computer Society, 2006. 6, 7

Invariant-based

methods

Algorithm-based fault

tolerance and self-checking software use invariants to check the validity of

the generated results. These methods require the existence of appropriate

invariants, which provide a good generic failure detection capability. However,

they are not easy (if not impossible) to find for most applications or limited

to specific algorithms and program sections. Generic invariants as

assertion-based loop invariant only detect significant variations of the

results reliably and may miss subtle errors or side-effects.

·

V.K. Stefanidis and K.G.

Margaritis. Algorithm based fault tolerance : Review and experimental study. In

International Conference of Numerical Analysis and Applied Mathematics, 2004.

6, 7

·

Hal Wasserman and Manuel

Blum. Software reliability via run-time result-checking. J. ACM, 1997. 6,

7

Redundancy

Other software approaches

for detecting hardware errors work with replicated execution and comparison

(voting) of the obtained results. The protected software is modified during or

before compilation – rarely, dynamic binary instrumentation is used.

Replication is applied at different levels of abstraction. A number of

approaches duplicate single instructions within one thread. Others execute

duplicates of the whole program using several threads. This methods are similar

to the typical hardware reliability approaches like double and triple module

redundancies. Duplication of the instructions helps only to detect a bit flip,

triple execution can even mask it.

·

George A. Reis, Jonathan

Chang, David I. August, Robert Cohn, and Shubhendu S. Mukherjee. Configurable

transient fault detection via dynamic binary translation. In Proceedings of the

2nd Workshop on Architectural Reliability (WAR), 2006. 7

·

C. Bolchini, A. Miele, M.

Rebaudengo, F. Salice, D. Sciuto, L. Sterpone, and M. Violante. Software and

hardware techniques for SEU detection in IP processors. J. Electron. Test.,

2008. 6, 7

·

Cheng Wang, Ho seop Kim,

Youfeng Wu, and Victor Ying. Compiler-managed software-based redun- dant

multi-threading for transient fault detection. In International Symposium on

Code Generation and Optimization (CGO), 2007. 7

Replicated execution and

comparison methods help against bit flips in CPU during instruction processing.

In a similar way, we can tolerate bit flips in memory. For this purpose, we

have to store two or three copies of our variables and compare them on

read. The main drawback of these methods is a very high performance

(execution time, memory consumption) overhead.

·

George A. Reis, Jonathan

Chang, and David I. August. Automatic instruction-level software-only recovery.

IEEE Micro, 27:36–47, January 2007. 2

·

George A. Reis, Jonathan

Chang, Neil Vachharajani, Ram Rangan, and David I. August. Swift: Software

implemented fault tolerance. In Proceedings of the international symposium on

Code generation and optimization, CGO ’05, pages 243–254, Washington, DC, USA,

2005. IEEE Computer Society. 2

Arithmetic codes

Instead of duplication, or

in addition to it, arithmetic codes can be used to detect errors. The

arithmetic codes are conserved by correct arithmetic operations that is, a

correctly executed operation taking valid code words as input produces a result

that is again a valid code word. On the other hand, faulty arithmetic

operations do not conserve the code with a very high probability, that is,

faulty operations result in a non-valid code word. Furthermore, arithmetic

codes protect data from unnoticed modifications during storage or transport on

a bus. These random modifications with high probability will not result in a

valid code word. Thus, the arithmetic codes facilitate not only the detection

of soft errors but can catch permanent hardware faults, too. AN, ANB, and ANBD

encodings are different types of arithmetic code protections. Note that in the

ISO standard for the functional safety of road vehicles (ISO 26262) encoding

with an ANBD-code is one of the two means to reach the ASIL-D level – the

highest safety level.

·

A. Avizienis. Arithmetic

Error Codes: Cost and Effectiveness Studies for Application in Digital System

Design. In Transactions on Computers, 1971. 7

·

Nahmsuk Oh, Subhasish

Mitra, and Edward J. McCluskey. ED4I: Error detection by diverse data and

duplicated instructions. IEEE Trans. Comput., 51, 2002. 7

·

onathan Chang, George A.

Reis, and David I. August. Automatic instruction-level software-only recovery.

In Proceedings of the International Conference on Dependable Systems and

Networks (DSN), Washington, USA, 2006. 7

·

Ute Schiffel, Martin Su ̈ßkraut, and Christof Fetzer.

AN-encoding compiler: Building safety-critical systems with commodity hardware.

In The 28th International Conference on Computer Safety, Reliability and

Security (SafeComp 2009), 2009. 7, 9, 10

·

Ute Wappler and Christof

Fetzer. Hardware failure virtualization via software encoded processing. In 5th

IEEE International Conference on Industrial Informatics (INDIN 2007), 2007. 7,

9, 8, 10

·

Ute Wappler and Christof

Fetzer. Software encoded processing: Building dependable systems with com-

modity hardware. In The 26th International Conference on Computer Safety,

Reliability and Security (SafeComp 2007), 2007. 7, 9, 10

Keep it Simple, Keep it Reliable

The main idea has been

already defined by the Einstein:

"Everything should be

as simple as it is, but not simpler."

Now, let me talk a bit

about existing SOFTWARE reliability models (SRM). The earliest concepts of SOFTWARE

reliability engineering were adapted from the older techniques of HW

reliability. However, the application of hardware methods to software has to be

done with care, since there are fundamental differences in

the nature of hardware and software faults. Since the last 20 years software

reliability engineering is a separate domain. H. Pham gave a classification of

actual software reliability models in his book "System software reliability". According to it,

there are the following groups of SRM: error seeding models, failure rate

models, curve fitting models, reliability growth models, time-series models, and

non-homogeneous Poisson process models. These models are based on software

metrics like lines of codes, number of operators and operands, cyclomatic

complexity, object oriented metrics and many others. An overview of SOFTWARE

complexity metrics you can find in: "Object-oriented metrics - a survey" and "A survey of software metrics".

All of the defined SRM

are Black-box Models that consider the software as an

indivisible entity. A separate domain, which is more interesting for me,

contains so-called Architecture-based SRM, like the ones described

in: "Architecture-based approach to reliability assessment of software systems"

and "An analytical approach to architecture-based software performance and

reliability prediction". These type of the models consider

software as a system of components with given failure rates or fault activation

probabilities (those can be evaluated using the black box models). Reliability

of the entire systems can be evaluated by processing information of system

architecture, failure behavior, and single component properties. Most of these

models are based on probabilistic mathematical frameworks like various Markov

chains, stochastic Petri nets, stochastic process algebra, and probabilistic

queuing networks. The architecture-based models help not only to evaluate

reliability but also to detect unreliable parts of the system.

Returning to the topic, I

want to refer to a very trivial principle: The simpler is SOFTWARE, the more

reliable is the SOFTWARE. This idea is very transparent. The majority of SOFTWARE

faults are actually bugs that have been introduced during SOFTWARE

design or implementation. Complex SOFTWARE contains more bugs. Hence, the

probability that one of these bugs will be activated is higher. This fact can

be proven by any SRM. To make a reliable SOFTWARE system you have to define a

function of this system very strictly and clear and develop the SOFTWARE

just for this function. This principle is too straightforward, but it will help

you to obtain a system "as simple as it is, but not simpler".

Software and Hardware Reliability

Majority of the dependability attributes are X-abilities. Where, X = Reliability, Maintain, Availability (well, only three of them, but you get the idea). They are so-called Non Functional Properties (NFP) of the system. The wiki gives a complete list of the NFPs.

Typically NFPs are defined in terms of probability. For instance, one of definitions of system reliability: "The probability that a system will perform its intended function during a specified period of time under stated conditions". See - the probability of blah blah blah.

This definition originally addresses to HW domain or Traditional Reliability. Using different standards, testing approaches, statistical observation we can estimate such probabilities for a HW part of the system. Moreover, we can define not only the probability of failure-free execution, but a time-depending failure rate. It usually looks like a bathtub ("bathtub curve"):

Failure rate are linked with well-known reliability metrics like MTTF (Mean Time To Failure) or MTBF (Mean Time Between Failures). HW fails at the beggining of utilization (infant mortality) and at the end - because of wear out. If you'll try to map this concept to the SW you'll see something like this:

Short explanation of the SW-curve: SW reliability mostly depends on the number of unfixed bugs within the program. The curve shows that majority of these bugs can be handled during the testing phase of SDLC. Each new upgrade contains new bugs and makes the system more unreliable. It looks fine and gives an opportunity to evaluate reliability of SW+HW systems.

But ... there is a question - how to evaluate these probabilities of failure, failure rates, numbers of bugs, whatever of the new SW product? Run it 100000 times? Using what inputs? How to obtain info about operational profile of the system before utilization? and so on.

One of the answers - by an application of Software Complexity Metrics (SCM) and Software Reliability Models (SRM). The SW reliability community is agreed with the following assumption: There is a straight forward correlation between SW reliability and a number of bugs. Something like that:

Pr ( Failure ) = N_unfixed_bugs * some_coefficient

Initial number of unfixed bugs is evaluated using SCMs. SRMs, particularly growth and curve-fitting models, model the process of bug fixing and reliability increasing with the time.

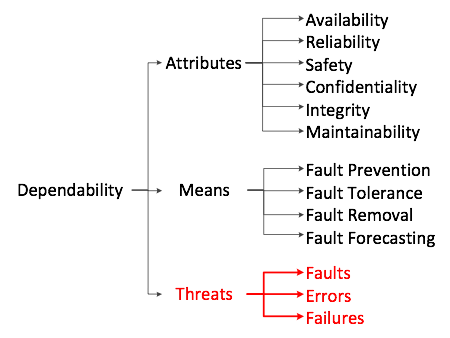

Dependable Systems: Introduction

"Fundamental Concepts of Dependability" outlined the results of nearly 20 years of activity in this domain and related ones. Introduced concept and the taxonomy will be used in my further posts. Next figure (taken from the article) shows co-called 'the dependability tree' that gives some intuition what is it all about:

Dependability is a system characteristic like functionality, performance or costs. Formal definition is as follows: "Dependability of a (computing) system is the ability to deliver service that can justifiably be trusted".

So according to "the dependability tree", we can describe it from 3 points of view: Attributes, means, and threats. Attributes - a kind of sub-characteristics of the dependability:

- Availability: readiness for correct service

- Reliability: continuity of correct service

- Safety: absence of catastrophic consequences on the user(s) and the environment

- Confidentiality: absence of unauthorized disclosure of information

- Integrity: absence of improper system state alterations

- Maintainability: ability to undergo repairs and modifications

Means - goals of dependability analysis:

- Fault prevention: how to prevent the occurrence or introduction of faults;

- Fault tolerance: how to deliver correct service in the presence of faults;

- Fault removal: how to reduce the number or severity of faults;

- Fault forecasting: how to estimate the present number, the future incidence, and the likely consequences of faults.

Threats - classification of threats:

- Fault is a defect on the system that can be activated and become a cause of an error (broken wire, electrical short, bug in a program).

- Error refers to incorrect internal state of the system or a discrepancy between the intended behavior of a system and its actual behavior inside the system boundary.

- Failure is an instance in time when a system displays behavior that is contrary to its specification.

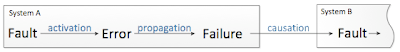

I want to tell a little bit more about the threats, because this concept is very interesting but not so obvious. Faults, errors and failures operate according to the chain shown in the next figure:

Fault activation can lead to an error. Once a fault is activated an error occurs. Examples of fault activation are execution of a line of code with a bug, an attempt to send a signal via corrupted connector or execution of a broken hardware part. An error may act in the same way as a fault. It can create further error’s conditions. An invalid state generated by the error may lead to another error or to a failure. Important to note, that failures are defined according to the system boundary. They are basically errors that have propagated out of the system and have become observable. If an error propagates outside the system boundary a failure is said to occur.

Tuesday 18 February 2014

Scope rules in C

Scope of an identifier is the part of the program where the identifier may directly be accessible. In C, all identifiers are lexically (or statically) scoped. C scope rules can be covered under following two categories.

Global Scope: Can be accessed anywhere in a program.

// filename: file1.cint a;int main(void){ a = 2;} |

// filename: file2.c// When this file is linked with file1.c, functions of this file can access aextern int a;int myfun(){ a = 2;} |

To restrict access to current file only, global variables can be marked as static.

Block Scope: A Block is a set of statements enclosed within left and right braces ({ and } respectively). Blocks may be nested in C (a block may contain other blocks inside it). A variable declared in a block is accessible in the block and all inner blocks of that block, but not accessible outside the block.

What if the inner block itself has one variable with the same name?

If an inner block declares a variable with the same name as the variable declared by the outer block, then the visibility of the outer block variable ends at the pint of declaration by inner block.

If an inner block declares a variable with the same name as the variable declared by the outer block, then the visibility of the outer block variable ends at the pint of declaration by inner block.

int main(){ { int x = 10, y = 20; { // The outer block contains declaration of x and y, so // following statement is valid and prints 10 and 20 printf("x = %d, y = %d\n", x, y); { // y is declared again, so outer block y is not accessible // in this block int y = 40; x++; // Changes the outer block variable x to 11 y++; // Changes this block's variable y to 41 printf("x = %d, y = %d\n", x, y); } // This statement accesses only outer block's variables printf("x = %d, y = %d\n", x, y); } } return 0;} |

Output:

x = 10, y = 20 x = 11, y = 41 x = 11, y = 20

What about functions and parameters passed to functions?

A function itself is a block. Parameters and other local variables of a function follow the same block scope rules.

A function itself is a block. Parameters and other local variables of a function follow the same block scope rules.

Can variables of block be accessed in another subsequent block?*

No, a variabled declared in a block can only be accessed inside the block and all inner blocks of this block. For example, following program produces compiler error.

No, a variabled declared in a block can only be accessed inside the block and all inner blocks of this block. For example, following program produces compiler error.

int main(){ { int x = 10; } { printf("%d", x); // Error: x is not accessible here } return 0;} |

Output:

error: 'x' undeclared (first use in this function)

As an exercise, predict the output of following program.

int main(){ int x = 1, y = 2, z = 3; printf(" x = %d, y = %d, z = %d \n", x, y, z); { int x = 10; float y = 20; printf(" x = %d, y = %f, z = %d \n", x, y, z); { int z = 100; printf(" x = %d, y = %f, z = %d \n", x, y, z); } } return 0;} |

Subscribe to:

Posts (Atom)